A Compact Dynamic 3D Gaussian Representation for Real-Time Dynamic View Synthesis

Abstract

3D Gaussian Splatting (3DGS) has shown remarkable success in synthesizing novel views given multiple views of a static scene.

Yet, 3DGS faces challenges when applied to dynamic scenes because 3D Gaussian parameters need to be updated per timestep, requiring a large amount of memory and at least a dozen observations per timestep.

To address these limitations, we present a compact dynamic 3D Gaussian representation that models positions and rotations as functions of time, each approximated with only a few parameters, while keeping the other 3DGS properties (scale, color, and opacity) time-invariant. Our method dramatically reduces memory usage and relaxes the strict multi-view assumption.
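To make the idea of time-dependent positions and rotations concrete, here is a minimal NumPy sketch. The abstract does not say which approximations are used, so the choices here are assumptions made purely for illustration: a truncated Fourier series for position and a linear function of time for the rotation quaternion. All function names, array layouts, and the number of terms are hypothetical and are not taken from the authors' code.

```python
import numpy as np

def position_at(t, base, cos_coef, sin_coef):
    """Position of each Gaussian at normalized time t in [0, 1].

    Assumed (hypothetical) layout:
      base:     (N, 3)    time-independent offset
      cos_coef: (N, K, 3) cosine coefficients
      sin_coef: (N, K, 3) sine coefficients
    """
    k = np.arange(1, cos_coef.shape[1] + 1)   # frequencies 1..K
    phase = 2.0 * np.pi * k * t               # shape (K,)
    return (base
            + np.einsum("k,nkd->nd", np.cos(phase), cos_coef)
            + np.einsum("k,nkd->nd", np.sin(phase), sin_coef))

def rotation_at(t, q0, q_slope):
    """Rotation quaternion per Gaussian: linear in t, then renormalized."""
    q = q0 + t * q_slope                      # shape (N, 4)
    return q / np.linalg.norm(q, axis=-1, keepdims=True)

def motion_floats_per_gaussian(num_terms):
    """Floats stored per Gaussian for motion under the assumptions above."""
    position = 3 * (1 + 2 * num_terms)        # offset + cos/sin pairs, per axis
    rotation = 4 * 2                          # intercept + slope, per component
    return position + rotation

def per_timestep_floats_per_gaussian(num_timesteps):
    """Floats stored per Gaussian when position and rotation are kept per timestep."""
    return num_timesteps * (3 + 4)
```

Under these assumptions the motion storage per Gaussian depends only on the number of series terms, not on the sequence length, whereas storing a separate position and rotation for every timestep grows linearly with the number of timesteps. Scale, color, and opacity would be stored once per Gaussian in either case.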

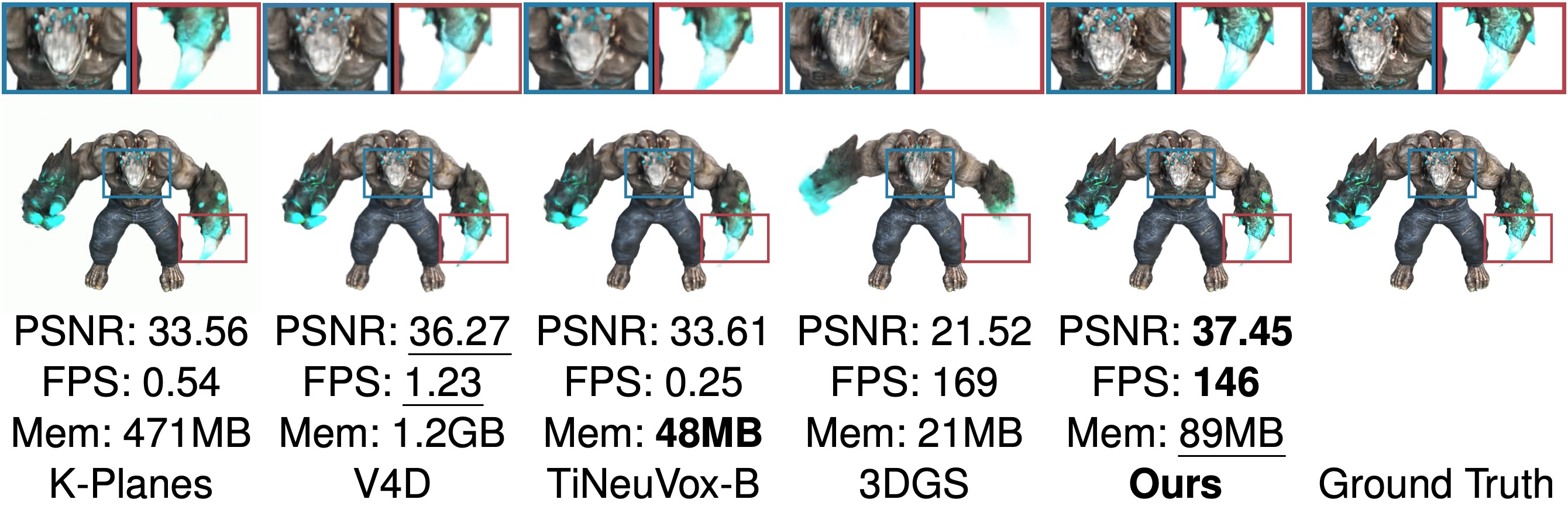

In our experiments on monocular and multi-view scenarios, we show that our method matches the rendering quality of state-of-the-art methods, which often render more slowly, while significantly surpassing them in speed: it renders at 118 frames per second (FPS) at a resolution of 1,352x1,014 on a single GPU.

Related Links

We express our gratitude for the seminal papers that introduced 3D Gaussian Splatting and Dynamic 3D Gaussians.

Many excellent works were introduced around the same time as ours.

4DGS also addresses a dynamic extension of 3DGS.

There are probably many more by the time you are reading this. Check out survey papers on 3DGS (Guikun Chen and Wenguan Wang; Ben Fei et al.; Tong Wu et al.) and MrNeRF's curated list of 3DGS papers.

BibTeX

@inproceedings{katsumata2024compact,
  title={A Compact Dynamic 3D Gaussian Representation for Real-Time Dynamic View Synthesis},
  author={Katsumata, Kai and Vo, Duc Minh and Nakayama, Hideki},
  booktitle={ECCV},
  year={2024},
}